🚀 Fast, Scalable, Cost-Efficient Data Engineering—Done Right.

⚡ Slow pipelines, sky-high cloud bills, and endless troubleshooting?

✅ Not anymore. I build, optimize, and scale data platforms that run fast, stay reliable, and keep costs under control—without the bloated overhead of a big consulting firm.

💰 Let’s fix your data pipelines and slash your cloud costs. Schedule your free consultation today!

Common Data Engineering headaches

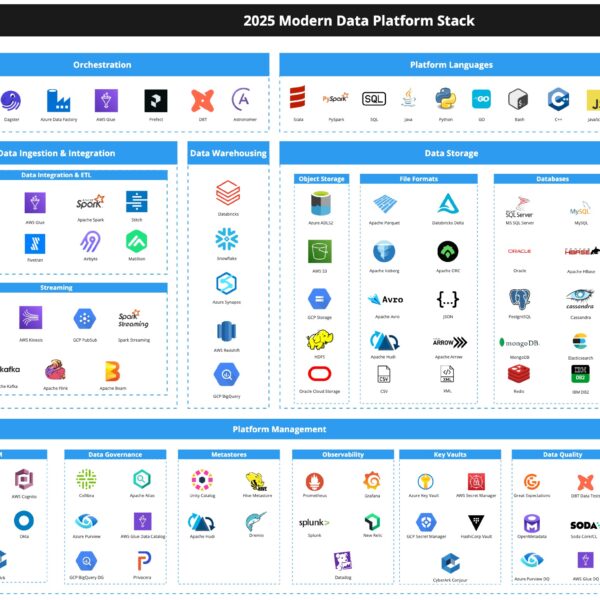

Do the endless technology choices make it impossible to move forward?

Are your ETL pipelines slow, failing, and unreliable?

Do your cloud costs keep skyrocketing, and you’re not sure why?

Are you drowning in vendor pitches, but no one actually takes ownership?

✅ I cut through the noise and help you choose the right tools for your needs, so you don’t waste time or money on the wrong stack.

✅ I build blazing-fast, reliable, and scalable ETL pipelines with Databricks, Spark, and Python—so your data is always fresh and your team can focus on real work.

✅ I optimize your data infrastructure to slash costs and improve performance—whether it’s tuning Spark jobs, right-sizing clusters, or eliminating waste.

✅ I work hands-on to deliver real, production-grade solutions. No handwaving, no “checking the boxes”—just real results you can rely on.

Services

Zero-to-Data Platform

Setting up a full Data Platform

Completely new to data? No problem. I build and configure scalable, secure, and cost-effective data workspaces for your company. Fully managed, with best practices built in from day one. Data lake? Data warehouse? Database? No problem, I’ve got you covered!

✅ Data Platform – Fully configured Dev, Test, and Prod workspaces with key vaults and data storage.

✅ IAM & Security – Secure identity & access management for your cloud platform.

✅ Optimized Compute & Cost Control – Avoid hidden cloud costs from day one.

Blazing-Fast ETLs

Building batch & streaming pipelines

Need high-quality ETL pipelines built fast? I specialize in cost-efficient, high-performance ETL solutions in Databricks, Snowflake, Apache Spark, Python, and SQL. Whether you’re starting fresh or migrating from another system, I’ll ensure your data pipelines are reliable, scalable, and cost-effective.

✅ Custom ETL Pipelines – Batch or streaming, tailored for your business needs.

✅ Optimized for Performance – No memory bottlenecks, data spills, or slow queries – just cost-effective, efficient pipelines.

✅ Pipeline Orchestration – Leverage tools like Apache Airflow, Azure Data Factory, AWS Glue, Dagster, or native Databricks jobs for scheduling & automation.

Companies I’ve worked with

About

Owner of Cloud Native Consulting

Hi, I’m Filip. Since 2017, I’ve been helping businesses—including Fortune 500 companies—design, deliver, and optimize their data infrastructure. I focus on solving technical problems so businesses can focus on what matters: serving their clients.

I do this by understanding your goals and working together to achieve them, with clear, actionable steps.

What sets me apart?

- No middlemen: No inflated costs for sales teams or junior engineers.

- Senior-level expertise: I’m hands-on from start to finish.

- Full transparency: Clear solutions, no jargon, no empty promises.

You’ll have full visibility and control, and I put my name on the line. My mission is to empower your team to deliver results independently—so you don’t need me anymore.

Let’s work together to take your data to the next level.

Let’s work together on your next tech project

FILIP PASTUSZKA

Senior Data Engineering Consultant

Owner of Cloud Native Consulting

filip@cloudnativeconsulting.nl