Some say an expert is someone who has made all the mistakes in the book. While I can’t claim to have made every mistake possible, I’ve certainly made enough to recognize the patterns.

If your data engineering team is constantly busy yet struggling to make real progress, chances are you’re dealing with one (or several) of these productivity killers. Let’s break them down and, more importantly, talk about how to fix them.

1. A Messy, Inefficient Development Workflow

If your team doesn’t have a well-thought-out development workflow, chaos is inevitable. Here’s what that might look like:

- Manually copying code instead of automating deployments

- Spinning up new environments by hand instead of using infrastructure as code

- Lack of a clear dev → qa/test/preprod → prod flow

- Endless manual steps that waste time and introduce errors

How to fix it: Standardize deployments with CI/CD pipelines. Automate infrastructure provisioning. Define a clear development process that minimizes unnecessary manual work.

2. Choosing Low-Code Tools That Slow Engineers Down

Low-code platforms promise speed, but they often create more problems than they solve:

- They introduce system limitations that require frustrating workarounds

- Merge conflicts and unreadable code become the norm

- Vendor lock-in makes migration a nightmare

- Testing and version control (Git-ability) take a hit

How to fix it: Before choosing a low-code tool, ask: Will this empower engineers or slow them down? If you have a strong engineering team, developer-friendly solutions are almost always a better choice.

3. Poor Developer Experience

A slow, painful development experience kills productivity. Common culprits include:

- Outdated or underpowered laptops. Seriously, this is one of the best investments you can make when you hire someone

- Excessive bureaucracy (constant approval requests, compliance forms, etc.)

- Lack of control over development environments

How to fix it: Invest in good hardware. Reduce unnecessary friction in the development process. Give engineers the autonomy they need to do their job efficiently.

4. No Isolated Environments for Development

If your development changes affect others in real time, you’re setting your team up for trouble. When developers are stepping on each other’s toes, productivity drops fast.

How to fix it: Use feature branches, containerized environments, or virtualized workspaces to ensure developers can work independently without breaking things for others.
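
One lightweight way to get isolation in a shared warehouse is to give every feature branch its own schema. Here is a minimal sketch of that idea. The function name, the `dev` prefix, and the 63-character limit (PostgreSQL’s identifier limit) are my assumptions for illustration, not something your platform necessarily requires.

```python
import re


def dev_schema_name(branch: str, prefix: str = "dev", max_len: int = 63) -> str:
    """Build a schema name that is specific to one feature branch.

    Hypothetical helper: the prefix and length limit are illustrative defaults.
    """
    # Lowercase, then collapse anything that is not a letter, digit,
    # or underscore into a single underscore.
    slug = re.sub(r"[^a-z0-9_]+", "_", branch.lower()).strip("_")
    # Truncate to the maximum identifier length the target database allows.
    return f"{prefix}_{slug}"[:max_len]


print(dev_schema_name("feature/JIRA-123-new-orders-model"))
# prints: dev_feature_jira_123_new_orders_model
```

Keep in mind that truncating very long branch names can make two branches collide on the same schema, so a real setup would probably want a short hash suffix as well.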

5. Lack of Tools to Speed Up Development

Without the right tools, developers waste time on repetitive tasks that should be automated. Examples include:

- No automated linting, pre-commit hooks, or style enforcement

- No IDE plugins to enhance productivity

- No package managers to streamline dependencies

How to fix it: Set up tools that improve workflow efficiency. Some great options:

- Python: Black, isort, poetry, pylint

- JavaScript: ESLint, Prettier, Husky

- Git: Pre-commit hooks for enforcing standards automatically

6. No Upstream Data Testing

If you only find out something is broken when a stakeholder calls, you’re already too late. Not catching upstream data issues means you’re constantly reacting instead of preventing problems.

How to fix it: Implement tools like DBT tests, Great Expectations, or SQLMesh to catch issues before they cascade downstream.
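
To make the idea concrete without tying it to any one tool, here is a small plain-Python sketch of an upstream contract check that runs before a batch is loaded. The `orders` feed, its columns, and the `validate_batch` function are all hypothetical. The tools above give you far more than this, but the principle is the same.

```python
from datetime import date

# Hypothetical contract for an upstream "orders" feed:
# column name -> expected Python type.
EXPECTED_COLUMNS = {
    "order_id": int,
    "customer_id": int,
    "amount": float,
    "order_date": date,
}


def validate_batch(rows):
    """Return a list of contract violations; an empty list means no violations."""
    errors = []
    for i, row in enumerate(rows):
        # Columns the contract expects but the row does not carry.
        missing = EXPECTED_COLUMNS.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        # Type check for every value that is present and not null.
        for column, expected_type in EXPECTED_COLUMNS.items():
            value = row.get(column)
            if value is not None and not isinstance(value, expected_type):
                errors.append(
                    f"row {i}: {column} is {type(value).__name__}, "
                    f"expected {expected_type.__name__}"
                )
    return errors
```

If `validate_batch` returns anything, the pipeline can stop and alert before the bad batch reaches downstream models, instead of a stakeholder discovering it later.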

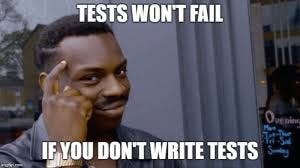

7. Ignoring Data Quality Checks

Data quality problems — like missing values, duplicate records, or incorrect joins — lead to bad decisions. If data engineers aren’t proactively checking data quality, expect trouble.

How to fix it: Introduce automated checks for:

- Uniqueness (e.g., duplicate IDs)

- Completeness (e.g., missing values)

- Consistency (e.g., data types, expected ranges)

- Unit test your data code. This is challenging, and I have yet to find a good way to do it, although DBT and SQLMesh offer promising solutions

Tools like DBT, Great Expectations, and SQLMesh can help enforce these rules.
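
For a feel of what the first three checks boil down to, here is a rough sketch in plain Python. The function names and the sample rows are made up for illustration. In practice you would usually declare these rules in one of the tools above rather than hand-roll them.

```python
from collections import Counter


def check_unique(rows, key):
    """Uniqueness: return key values that appear more than once."""
    counts = Counter(row[key] for row in rows)
    return sorted(value for value, n in counts.items() if n > 1)


def check_complete(rows, required):
    """Completeness: return (row index, column) pairs with a missing value."""
    return [
        (i, col)
        for i, row in enumerate(rows)
        for col in required
        if row.get(col) is None
    ]


def check_range(rows, column, low, high):
    """Consistency: return indexes of rows whose value is outside [low, high]."""
    return [
        i
        for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]


# Made-up sample data with one duplicate ID, one missing email, one bad age.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 2, "email": "c@example.com", "age": 212},
]

print(check_unique(rows, "id"))               # prints: [2]
print(check_complete(rows, ["id", "email"]))  # prints: [(1, 'email')]
print(check_range(rows, "age", 0, 120))       # prints: [2]
```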

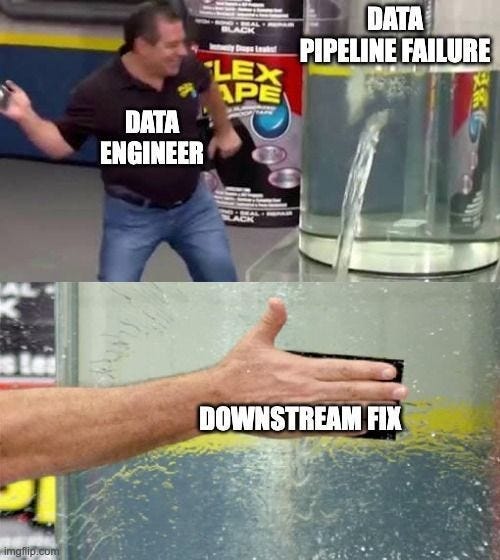

8. Frequent Pipeline Failures & Firefighting

If your team spends more time fixing broken pipelines than building new features, something is wrong. Common causes include:

- Upstream data changes breaking workflows

- Fragile pipeline logic that’s prone to errors

- Poor infrastructure maintenance

How to fix it:

- Monitor pipelines proactively with alerts and dashboards

- Write robust, resilient pipeline logic

- Invest in infrastructure automation and monitoring
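
One small piece of “resilient pipeline logic” is retrying transient failures with exponential backoff instead of failing on the first hiccup. Below is a minimal sketch of that. `run_with_retries` and its parameters are hypothetical, and most orchestrators ship their own retry settings, which you should prefer when they are available.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run task(); on failure wait base_delay * 2**(attempt - 1) seconds and retry.

    `sleep` is injectable so the waiting can be replaced in tests.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of attempts: re-raise so monitoring and alerts see the failure.
                raise
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.0f}s")
            sleep(delay)
```

This sketch catches every `Exception` to stay short. In a real pipeline you would likely retry only errors you know to be transient (timeouts, dropped connections) and let genuine logic errors fail immediately.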

9. Reckless Logic Changes Without Impact Assessment

Quick and dirty fixes might work now, but they can create downstream chaos. Changing logic without verifying its impact is a recipe for disaster.

How to fix it: Use a data-diffing tool, such as Datafold’s Data Diff or a DBT-based comparison, to check before-and-after results before deploying changes.
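
The core idea behind a data diff is simple enough to sketch in a few lines. This is not how any particular tool implements it, just a toy version that compares two small result sets by primary key. The `diff_rows` function and the sample data are invented for illustration.

```python
def diff_rows(before, after, key):
    """Compare two row sets by primary key; report added, removed, changed keys."""
    before_by_key = {row[key]: row for row in before}
    after_by_key = {row[key]: row for row in after}
    added = sorted(after_by_key.keys() - before_by_key.keys())
    removed = sorted(before_by_key.keys() - after_by_key.keys())
    # Keys present on both sides whose rows differ in at least one column.
    changed = sorted(
        k
        for k in before_by_key.keys() & after_by_key.keys()
        if before_by_key[k] != after_by_key[k]
    )
    return {"added": added, "removed": removed, "changed": changed}


# Made-up output of a model before and after a logic change.
before = [{"id": 1, "revenue": 100}, {"id": 2, "revenue": 50}, {"id": 3, "revenue": 75}]
after = [{"id": 1, "revenue": 100}, {"id": 2, "revenue": 55}, {"id": 4, "revenue": 20}]

print(diff_rows(before, after, key="id"))
# prints: {'added': [4], 'removed': [3], 'changed': [2]}
```

If a “small fix” unexpectedly adds, removes, or changes rows, you want to find that out from a report like this and not from a dashboard in production.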

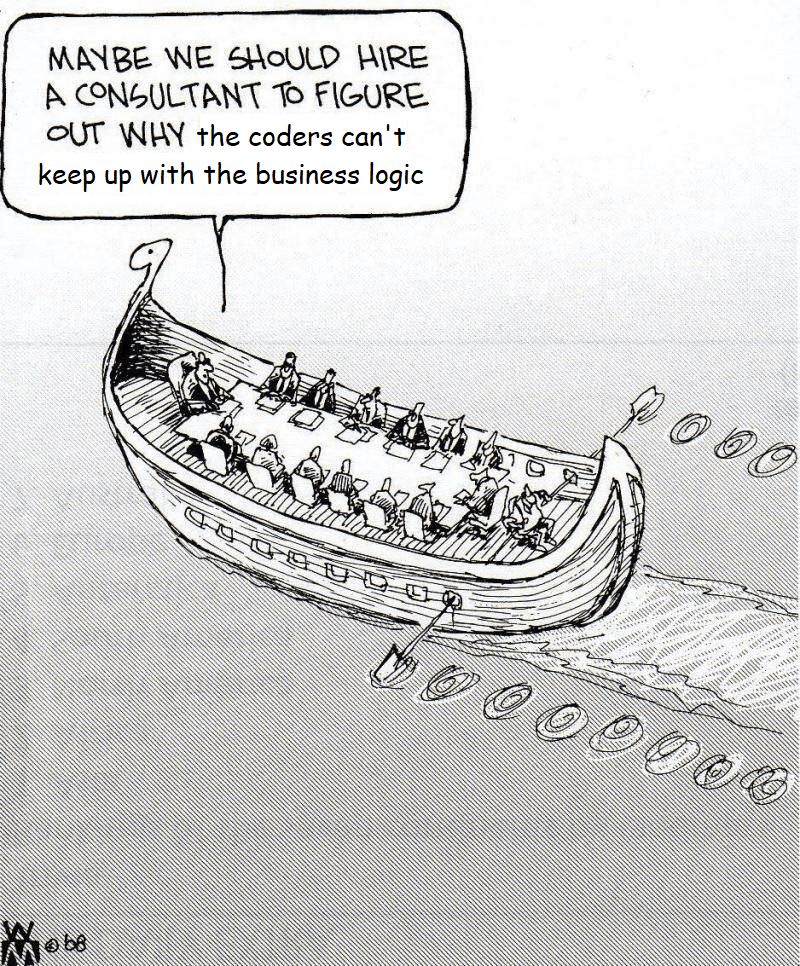

10. Scattered Business Logic

Business logic hidden across multiple places — SQL queries, pipeline scripts, API calls — creates confusion and maintenance nightmares.

How to fix it: Follow software engineering best practices:

- Keep logic centralized and modular

- Use version-controlled transformations (e.g., DBT models)

- Apply DRY (Don’t Repeat Yourself) principles
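
As a tiny illustration of “centralized and modular”, the sketch below defines one business rule in exactly one place and reuses it everywhere. The rule itself (which order statuses count as active) and all the names are hypothetical.

```python
from decimal import Decimal

# Single source of truth for a hypothetical business rule:
# which order statuses count toward revenue.
ACTIVE_STATUSES = frozenset({"paid", "shipped"})


def is_active_order(order):
    """The one definition of 'active' that every report should reuse."""
    return order["status"] in ACTIVE_STATUSES


def net_revenue(orders):
    """Sum amount minus refunds over active orders only."""
    return sum(
        (
            o["amount"] - o.get("refund", Decimal("0"))
            for o in orders
            if is_active_order(o)
        ),
        Decimal("0"),
    )


orders = [
    {"status": "paid", "amount": Decimal("100"), "refund": Decimal("10")},
    {"status": "cancelled", "amount": Decimal("40")},
    {"status": "shipped", "amount": Decimal("25")},
]
print(net_revenue(orders))  # prints: 115
```

When the definition of “active” changes, you edit `ACTIVE_STATUSES` once instead of hunting for copies of the same filter across queries, scripts, and API handlers.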

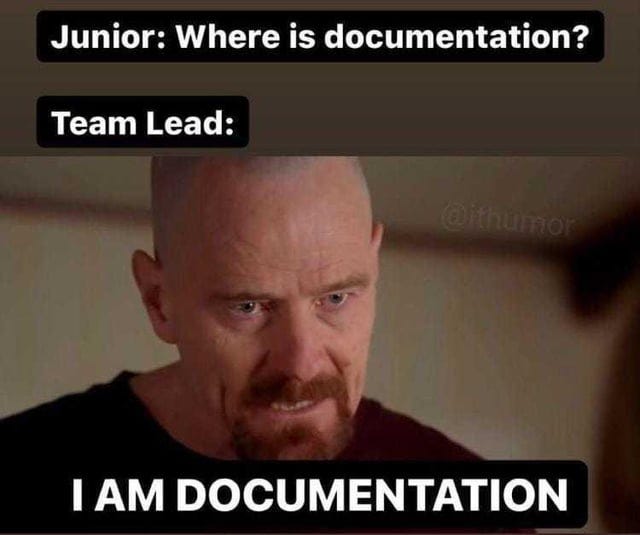

Bonus: Skipping Documentation

You don’t have to document everything, but if your team’s response to a question is “Go ask [insert tech lead’s name],” you have a problem.

How to fix it: Make documentation a habit. Even a simple README or an inline comment can save hours of confusion.

Final Thoughts

The good news? Most of these issues have solutions. The bad news? Well… you have to actually implement them.

What are the biggest productivity killers you’ve seen in data engineering? Let’s vent in the comments! 🚀